We have compiled most frequently asked SQL Server Interview Questions which will help you with different expertise levels.

SQL Server Interview Questions on Integration Seruices?DTS

Note We had seen some questions on DTS in the previous chapter "Data Warehousing".

But in order to just make complete justice with this topic, I have included them in integration services.

Question 1.

What is Integration Services import/export wizard?

Answer:

Note What is DTS Import /Export Wizard? Note Try to do this practice as it can be useful if the interviewer wants to visualize the whole stuff.

DTS import/export wizard lets us import and export from external data sources. There are seven steps that you can just go through of how to use the wizard.

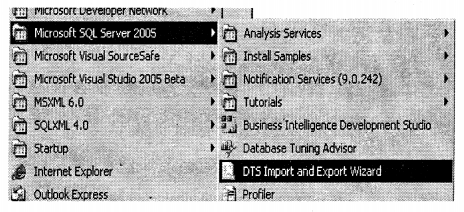

You can find DTS import and export wizard as shown below.

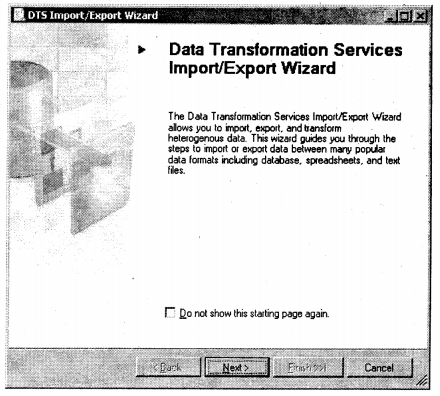

You will be popped with a screen as below click “Next’

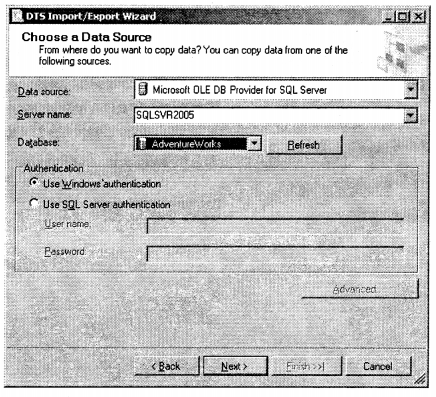

The next step is to specify from which source you want to copy data. You have to specify the Data source name and server name. For the understanding purpose, we are going to move data between “AdventureWorks” databases. I have created a dummy table called “SalesPersonDummy” which has the same structure as that of the “Salesperson” table. But the only difference is that “SalesPersonDummy” does not have data.

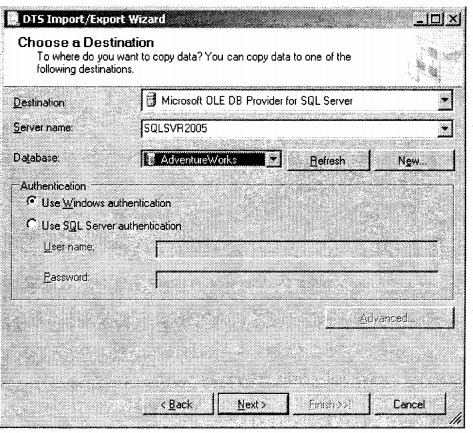

The next step is to specify the destination where the source will be moved. At this moment we are moving data inside “AdventureWorks” itself so specify the same database as the source.

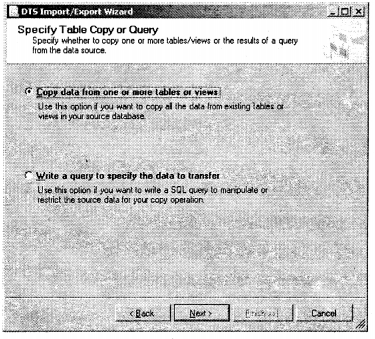

The next step is to specify the option from where you want to copy data. For the time being, we going to copy from the table so selected the first option.

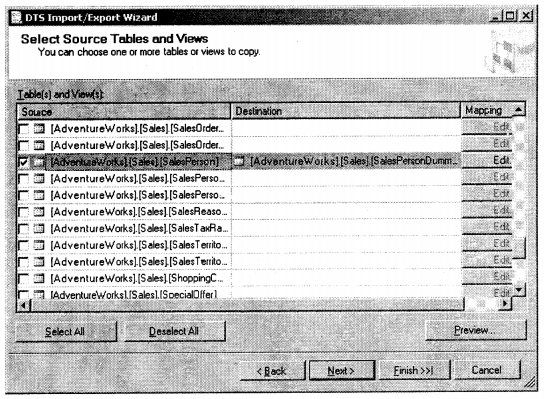

Finally, choose which object you want to map where. You can map multiple objects if you want.

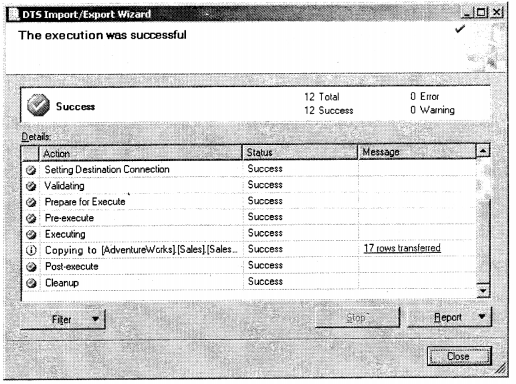

When everything goes successful you can see the below screen, which shows the series of steps DTS has gone through.

Question 2.

What arc prime components in Integration Services?

Answer:

There are two important components in Integration services:-

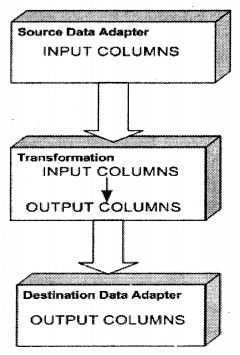

- DTP ( Data transformation pipeline)

DTP is a bridge that connects the source (CSV, any other Database, etc) and the destination (SQL Server Database). While DTP moves data between source and destination, transformation takes place between input and output columns. Probably some columns will go as one-to-one mapping and some with some manipulations.

- DTR ( Data transformation runtime)

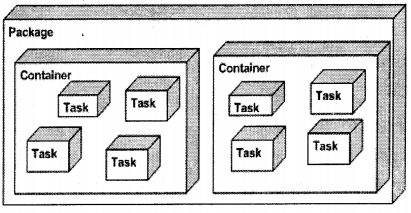

While DTP acts as a bridge DTR controls your integration service. They are more about how will be the workflow and different components during transformation. Below are different components associated with DTR:-

- Task: – It’s the smallest unit that you want to execute.

- Container: – Container logically groups task. For instance, you have a task to load

- CSV file into the database. So you will have two or three tasks probably:-

- Parse the CSV file.

- Check for field data type

- Map the source field to the destination.

So you can define all the above work as tasks and group them logically into a container called a Container.

- Package: – Package is executed to actually do the data transfer.

DTP and DTR model expose API which can be used in .NET language for better control.

Note I can hear the shout practical.. practical I think I have con fused you guys over there.

So let's warm-up on some practical DTS stuff. 1000 words is equal to one compiled program -

Shivprasad Koirala? I really want to invent some proverbs if you do not mind it.

Question 3.

How can we develop a DTS project in Integration Services?

Answer:

Twist: – Can you say how have you implemented DTS in your project and for what?

Note: – We had visited DTS import/export wizard in the previous section of this chapter. But for a real data transformation or a data warehousing (ETL process), it’s not enough. You will need to customize the project, there’s where we can use this beautiful thing called a “BI development project”. If possible just try to go step by step in creating this sample project.

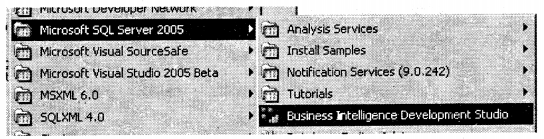

You can get the development studio as shown below.

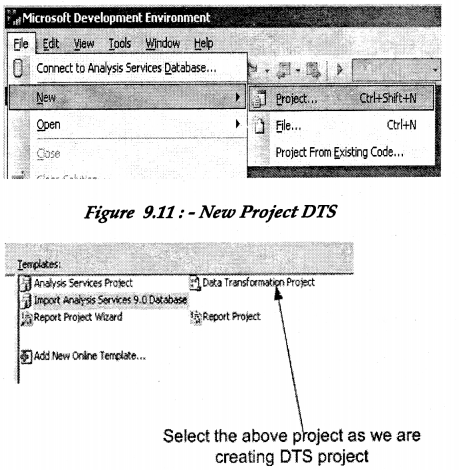

Click File—New – Project and select “Data Transformation Project”.

Give a name to the project as a “Salesperson” project. Before moving ahead let me give a brief about what we are trying to do. We are going to use “Sales.SalesPerson” table from the “AdventureWorks” database. “Sales. Salesperson” table has a field called “Bonus”. We have the following task to be accomplished:-

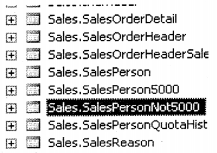

Note These both tables have to be created manually by you. I will suggest to use the create statements

and just make both tables. You can see in the image below there are tzvo tables "SalesPerson5000"

and "SalesPersonNot5000".

- Whenever the “Bonus” field is equal to 5000 it should go in”Sales.Salesperson5000″.

- Whenever the “Bonus” field is not equal to 5000 it should go in “Sales. Salesperson Not5000”.

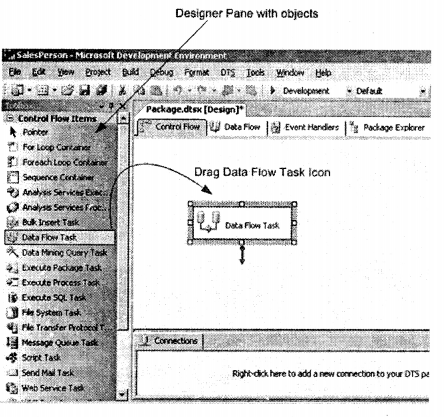

Once you selected the “Data transformation project”, you will be popped with a designer explorer as shown below. I understand you must be saying it’s cryptic…it is. But let’s try to simplify it. On the right hand, you can see the designer pane which has a lot of objects on it. On the right-hand side, you can see four tabs (Control flow, Data Flow, Event handlers, and Package Explorer).

Control flow: – It defines how the whole process will flow. For example, if you loading a CSV file. Probably you will have tasks like parsing, cleaning, and then loading. You can see a lot of control flow items that can make your data mining task easy. But first, we have to define a task in which we will define all our data flows. So you can see the curve arrow which defines what you have to drag and drop on the control flow designer You can see the arrow tip which defines the output point from the task.

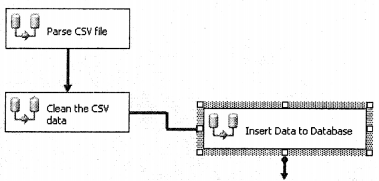

In this project, I have only defined one task, but in the real-time project, something below like this can be seen (Extraction, Transformation, and Loading: – ETL). One task points as an input to another task and the final task inputs data in SQL Server.

Data Flow: – Data flow says how the objects will flow inside a task. So Data flow is a subset of a task defining the actual operations.

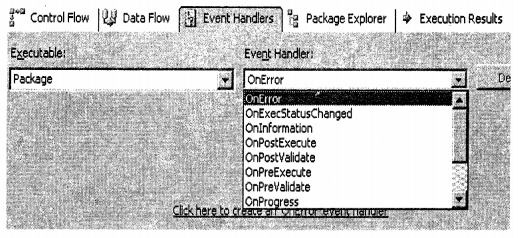

Event Handlers: – The best part of DTS is that we can handle events. For instance, if there is an error what action do you want it to do. Probably log your errors in the error log table, flat file, or be more interactive send a mail.

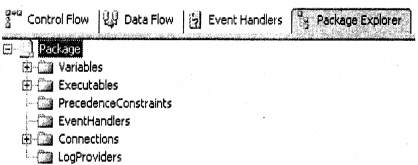

Package Explorer: – It shows all objects in a DTS in a hierarchical way.

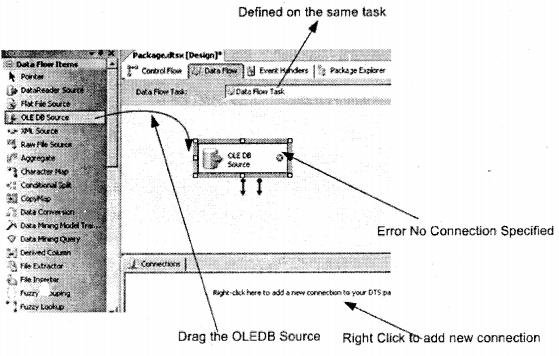

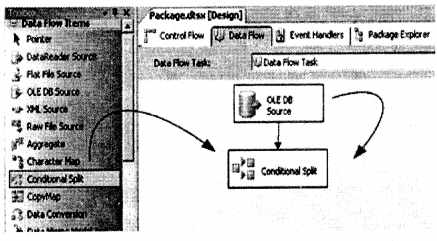

Now that you have defined your task it’s time to define the actual operation that will happen within the task. We have to move data from “Sales.SalesPerson” to “Sales.SalesPerson5000” (if their “Bonus” fields are equal to 5000) and “Sales.SalesPersonNot5000” (if their “Bonus” fields are not equal to 5000). In short, we have “Sales. The salesPerson” as the source and the other two tables as Destination. So click on the “Data Flow” tab and drag the OLEDB Source data flow item on the designer, we will define the source in this item. You can see that there is some error which is shown by a cross on the icon. This signifies that you need to specify the source table that is “Sales. Salesperson”.

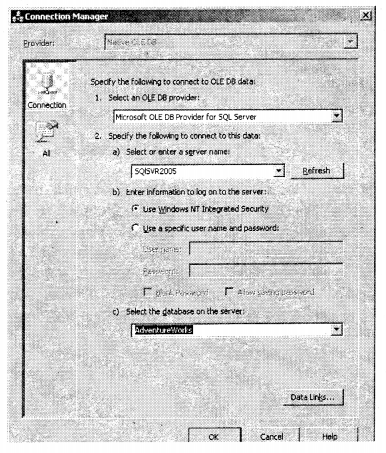

In order to specify source tables, we need to specify connections for the OLEDB source. So right-click on the below tab “Connections” and select “New OLEDB Connection”. You will be popped up with a screen as shown below. Fill in all details and specify the database as “AdventureWorks” and click “OK”.

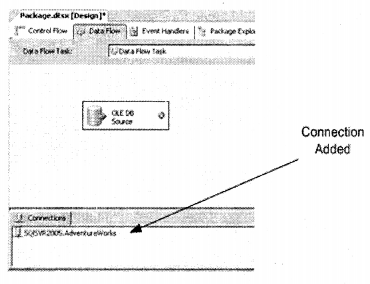

If the connection credentials are proper you can see the connection in the “Connections” tab as shown in the below figure.

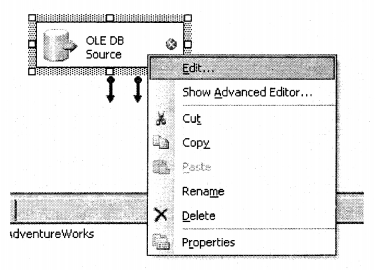

Now that we have defined the connection we have to associate that connection with the OLE DB source. So right dick and select the “Edit” menu.

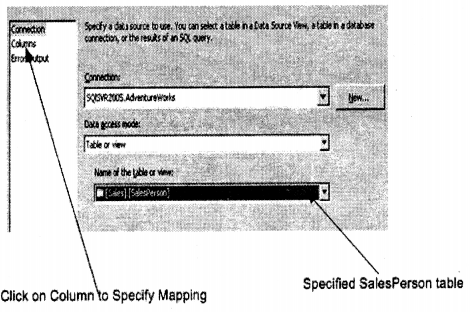

Once you click edit you will see a dialog box as shown below. In data access mode select “Table or View” and select the “Sales. Salesperson” table. To specify the mapping click on the “Columns” tab and then press ok.

If the credentials are ok you can see the red Cross is gone and the OLE DB source is not ready to connect further. As said before we need to move data to appropriate tables on the condition that “Bonus” field value. So from the data flow item drag and drop the “Conditional Split” data flow item.

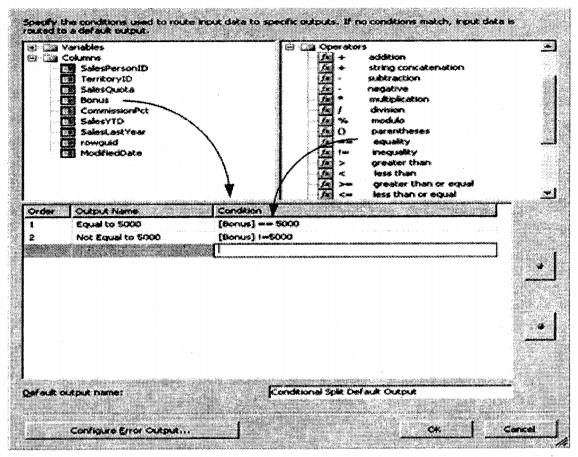

Right-click on the “Conditional Split” data flow item so that you can specify the criteria. It also gives you a list of fields in the table which you can drag-drop. You can also drag-drop the operators and specify the criteria. I have made two outputs from the conditional split one which is equal to 5000 and the second not equal to 5000.

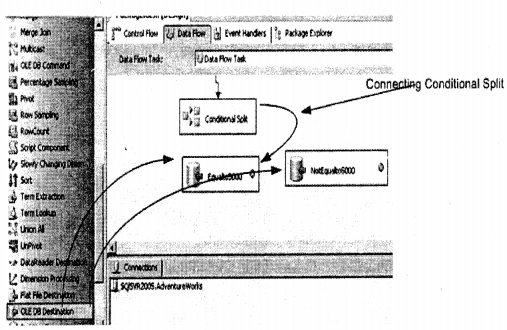

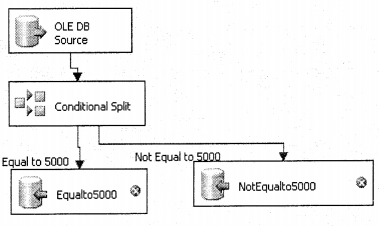

Conditional split now has two outputs one which will go in “Sales.SalesPerson5000” and the other in “Sales.SalesPersonNot5000”. So you have to define two destinations and the associate respective tables to them. So drag two OLE DB destination data flow items and connect them to the two outputs of the conditional split.

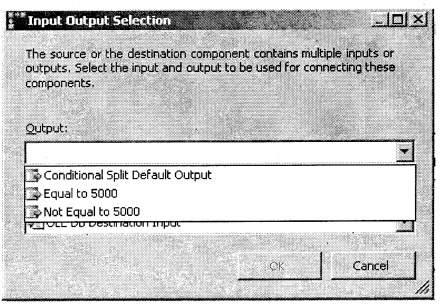

When you drag from the conditional split items over OLEDB destination items it will pop up a dialog to specify which output this destination has to be connected to. Select the one from the drop-down and press ok. Repeat this step again for the other destination object.

That’s the final Data flow structure expected.

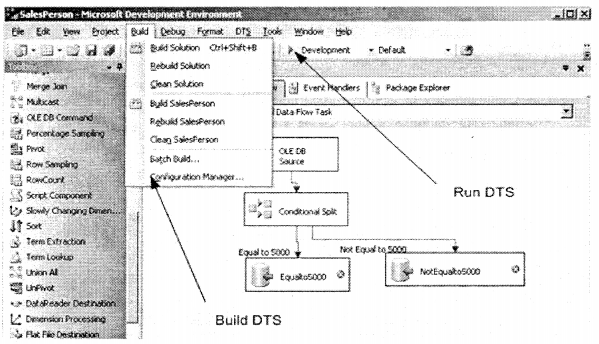

It’s time to build and run the solution which you can do from the drop-down. To run the DTS you press the green icon as pointed by the arrow in the below figure. After you run the query both the tables have the appropriate values or not.

Note You can see various dataflow items on the right-hand side; it’s out of the scope to cover all items ( You must be wondering hoiv much time this author will say out of scope, but it’s a fact guys something you have to explore). In this sample project, we needed the conditional split so we used it. Depending on projects you still need to explore the toolbox. It’s rare that an interviewer will ask about individual items but rather ask fundamentals or a general overview of how you did DTS.